Matthew Maxwell: ‘The Lovelace Test: Abstract Art in the Era of Synthetic Reality’

After 25 years as a creative director in the business of interaction, Matthew Maxwell has returned to full-time education at Middlesex University, London. His mixed-mode MPhil/PhD research is looking at the influence of Generative Artificial Intelligence (GAI) on artistic creativity. It aims to investigate the potential of employing GAI applications as innovative interlocutors within a contemporary art practice, exploring how concepts of authorship, originality, and the interplay between synthetic and organic intelligence in the creative journey can be reshaped through AI’s involvement.

For anyone interested in abstract art, the United States in the 1950s and 1960s was a fine time and place to be alive. Post-war America was rolling in cash and all manner of esoteric experiments were possible. Timothy Leary was dosing Harvard Psychology students with LSD. Kerouac and Ginsberg were celebrating madness. Pop music discovered rhythm. Everyone was growing their hair. The subjective, emotional experience that characterised Abstract Expressionism was suddenly a legitimate subject of study – not just in the arts but across the humanities and sciences. Mainframe computers were no longer simply the stuff of science fiction, but the mainstays of science departments. It was a groovy, interdisciplinary time. Art, Psychology, and Engineering came together in a startling, tangled commingling, in what subsequently became known as The Cognitive Revolution.

The study of the mind, the artistic depiction of mental states, and a growing understanding of how important computing technology would become, all occurred at roughly the same time. While cognitive scientists like Chomsky and Kahneman were exploring language and the ‘active agency’ of consciousness, the Abstract Expressionists (Pollock, Rothko et al.) were developing fresh ways to represent emotion and the experience of being alive – of being sentient. At the same time, the idea that ‘calculating machines’ could be designed to think like humans was gathering pace.

The ‘Cognitive Revolution’ that spanned two decades embraced cross-disciplinary thinking; during this period three intellectual avenues, psychology, computing and painting, began to influence and align with each other. New ideas of how ‘thinking’ happens affected how computers were designed – maybe they could do more than just crunch numbers and crack codes. Ontological issues of existence and reality, now no longer exclusively human-centric ideas, seemed to find their natural expression in the rhythms, drips and vibrations of the New York school.

That was half a century ago.

Now, in 2023, I believe we are entering a new era of inflection, where disciplines that have diverged are once more bleeding into each other. In the past 12 months we’ve witnessed a tsunami of AI-powered art. Readily accessible and requiring almost zero technical knowledge, AI applications, trained on millions of examples of visual art from all cultures and eras (more than any single human could ever see, let alone remember), are flooding the digital world with imagery, text, video and music.

How does this impact ideas of authorship? Who is making these works – the AI with the almost limitless vocabulary of imagery – or the human agent, pressing the buttons, prompting the stimuli, selecting the winners? What about the craft skills that an artist can take a lifetime to acquire? Do they still have any value? Or are these synthetic neural networks, with their apparently superhuman capabilities, simply new tools which democratise the creative process, making the imagination and the awareness of inner states more accessible, and to more people? What does it mean for a) our understanding of subjective emotion and memory, and b) the artistic or creative processes we use to capture and communicate that understanding?

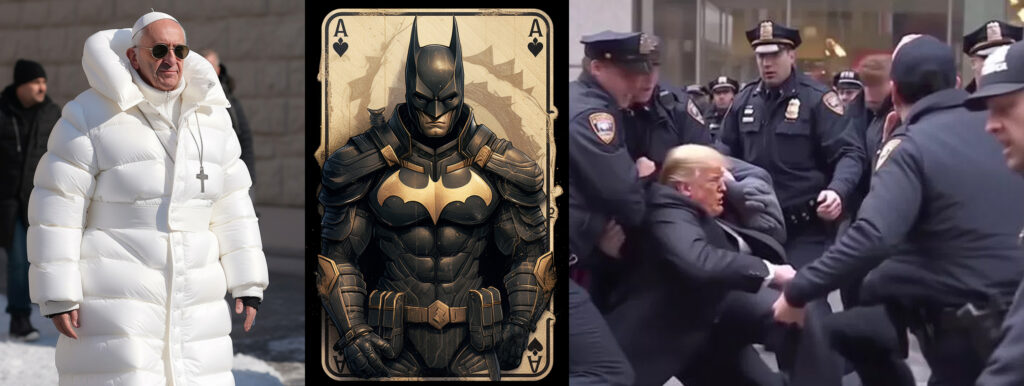

Pope in a Puffer

For all its apocalyptic reputation, much of the generative AI imagery we’re seeing doesn’t really look very radical at all.

The Pope in a stylish overcoat. Superheroes, fairies and elves. Some malicious (or satirical) celebrity fakery. The detail can be ingenious, but is this Creation? Or is it just Generation? It’s an important question, because our ‘creativity’ is one of the things that we humans cling to as being at the heart of our uniqueness. It’s always unsettling when something central to one’s sense of self suddenly appears to be not so special or unique after all. The cognitive discomfort of having to revise our bedrock schema can be tough. Especially when we’re reassessing our relationship with technology; AI in particular. If we can’t be sure what makes us, us, how can we be sure that AI is not us, also? Boundaries are important cognitive blocks. Without them, we lose sight of where we stand. And if our species’ ability to invent, synthesise, and manifest is so important (and to artists it’s pretty central), perhaps we need to define more closely what we mean by ‘creativity’.

Four Perspectives on Creativity

In a 2023 article ‘Creative encounters of a posthuman kind – anthropocentric law, artificial intelligence, and art’ (https://www-sciencedirect-com.ezproxy.mdx.ac.uk/science/article/pii/S0160791X23000027?via%3Dihub#sec2), two Lithuanian scholars, Kalpokiene and Kalpokas, usefully identify several ways to look at ‘creativity’:

The person perspective. This asks whether the creative agent (human or not) possesses the attributes necessary for being creative. So, what are those attributes? Typically, we’ll fall back on cognitive qualities – and especially the least measurable ones: aesthetic pleasure, love, anxiety, spirituality, and so on. But also the higher capabilities: memory, language, and other phenomena of the mind. These are the primary drivers that distinguish the human artist from the nest-building sparrow or the car-assembly robot. It’s a question of being the ‘right’ kind of agent. Do we have any hard and fast rules as to who or what can or cannot be a ‘creative agent’? Precedents abound where the creative process is not ‘a singular individual’s psyche, emotional condition, or expressive point of view’ (M. Mazzone and A. Elgammal, ‘Art, creativity, and the potential of artificial intelligence’, Arts, 8 (1) (2019), pp. 1-9) but rather the output of a collective effort involving many hands and diverse specialisms. The beautiful abstractions of Islamic architecture are not the creation of a single genius, but of many artisans, financiers, and spiritual advisors, to name a few. Indeed, their collective intelligences, meeting to solve a specific absence, could be seen as a close analogy to the way that AI systems work – accelerating knowledge transfer through determinate but flexible protocols.

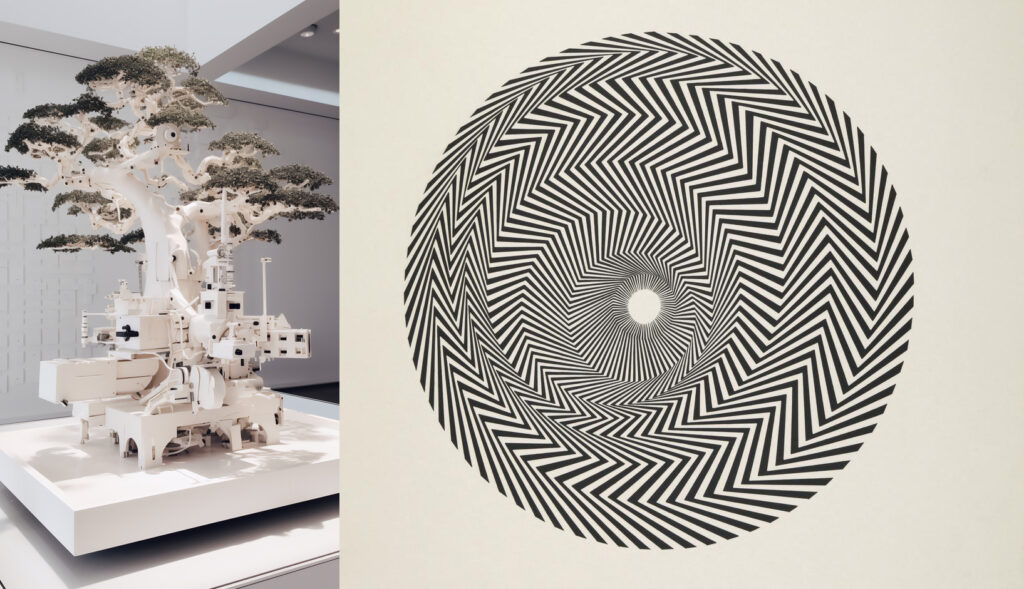

In the spirit of collaboration I asked Midjourney to involve me in the process, blending three images: the original above, with two added stylistic suggestions: an image of my own, from an AI-generated series on trees, and Bridget Riley’s painting, ‘Blaze’ of 1964.

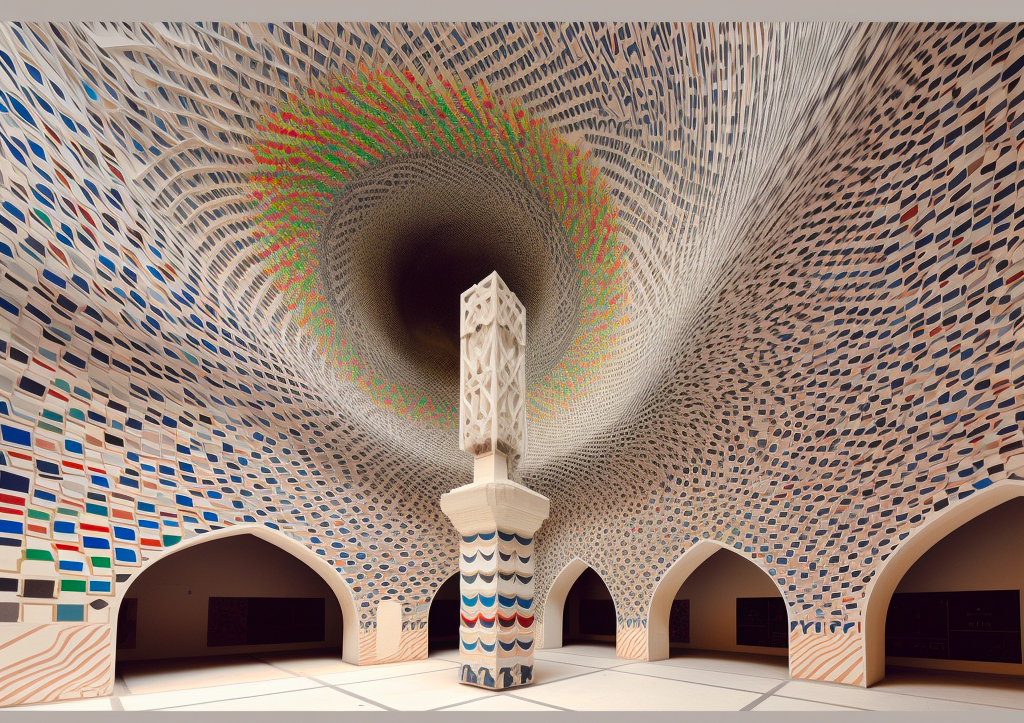

The results are below. Do these pass the Person perspective? The creative agents are multiple – not only the makers of the mosque, Riley herself, and my own contribution, but also all the other relevant references the AI is familiar with. Which number millions. It takes my catalytic suggestions, scans human culture, and produces variations. In these variations, the columns have become subway pillars, with coloured signage, and Mondrian-ish coloured tiles that could be from any public transport system anywhere in the world.

In another variant, the Arabic arches begin to flatten. The ceiling forms a whirling concavity – closer to the Riley example. The AI agent has taken the classic domical architecture and added an organic fluidity all its own. The coloured tiles more irregular now. The rhythmic patterns looser. But that statuary in the middle is disturbing. Where has that come from? The AI has made that decision. And drawing from its library of forms, suggests an addition which is novel, elegant, and contextually plausible.

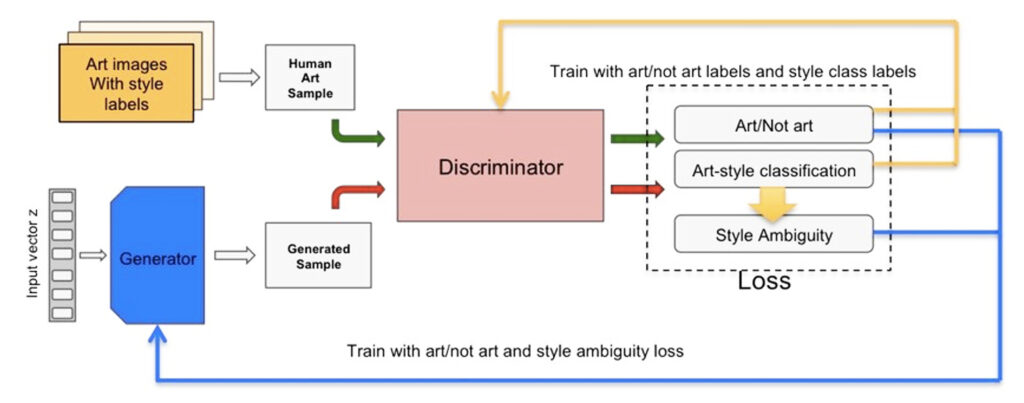

Kalpokiene and Kalpokas’ second criterion is the process perspective. They ask whether the actions taken to produce the work amount to creativity. Instead of focusing on the creator’s attributes, this perspective analyses how the work is produced. Here we need to take a closer look at how AI actually works, and again, we find similarities with human creators. Generative Adversarial Networks (GANs) work as creative partners in a way which feels very familiar. Two neural networks are paired. One, the Generator, creates new material (like the painter applying paint to canvas). The second neural network, the Discriminator, observes the new material and decides what to retain (true) and what to remove (false). (The painter decides which marks to retain and which to improve.) The Generator learns from this and works to increase the True data and reduce the False, until an equilibrium between the two networks is reached. Google’s machine learning documentation (https://developers.google.com/machine-learning/gan/gan_structure, accessed 12/06/23) explains this process. It’s an ‘adversarial’ method that will be familiar to most creators, be they painting, song writing, costume design, or any artistic endeavour: the progressive elimination of everything which doesn’t belong, according to criteria housed somewhere in the artist’s mysterious body of experience, memory, and expectation.
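For readers who want to see the mechanics, the Generator and Discriminator loop can be sketched in a few lines of Python. This is a toy under loud assumptions, not how any real image generator is built: the ‘artworks’ are single numbers clustered around 4.0, the Generator is a straight line, the Discriminator is a single logistic unit, and every name and setting (`train_toy_gan`, the learning rate, the starting values) is invented for illustration.

```python
import math
import random


def sigmoid(x):
    # Numerically safe logistic function: maps any score to a value in [0, 1].
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Toy 1-D GAN (illustrative assumption, not a real art model)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # Generator: fake = a * noise + b
    w, c = 0.1, 0.0   # Discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = rng.gauss(real_mean, real_std)   # a sample of the "real" data
        z = rng.gauss(0.0, 1.0)                 # random noise fed to the Generator
        fake = a * z + b                        # the Generator's attempt

        # Discriminator step: push D(real) towards 1 and D(fake) towards 0
        # (gradient ascent on log D(real) + log(1 - D(fake))).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: adjust a and b so the updated Discriminator
        # rates the fake as more "real" (gradient ascent on log D(fake)).
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w
        a += lr * grad_fake * z
        b += lr * grad_fake

    return {"a": a, "b": b, "w": w, "c": c}


if __name__ == "__main__":
    print(train_toy_gan())
```

Calling `train_toy_gan()` returns the final settings of both networks. Even in this tiny case the pair tend to chase each other rather than settle neatly, which is one reason the ‘equilibrium’ in real systems is hard won.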

This competitive learning collaboration can go even further. A Creative Adversarial Network (CAN) is specifically designed with a view towards ‘maximizing deviation from established styles and minimizing deviation from art distribution. This is achieved by modifying the principle of competition: one algorithm is tasked with following the pre-existing aesthetic tradition as gauged from the training set while the other penalises output too similar to existing styles, leading, in combination, to novel artistic output that is, nevertheless, steeped in tradition – all without human curation.’ (A. Elgammal, et al., https://arxiv.org/abs/1706.07068)
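The two pressures in that passage can be written down as a pair of penalties on the generating network. The sketch below is a simplified illustration in Python, not the formulation from the Elgammal paper, whose loss differs in detail. It assumes a critic that reports a probability that the image is art (`p_art`) and a list of probabilities over known styles (`style_probs`); the function names are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0); an implementation convenience


def style_ambiguity(style_probs):
    """Cross-entropy between a uniform target and the critic's style guess.

    Smallest when the critic cannot tell which established style the image
    belongs to, largest when one style is confidently recognised.
    """
    k = len(style_probs)
    return -sum((1.0 / k) * math.log(max(p, EPS)) for p in style_probs)


def generator_loss(p_art, style_probs):
    # First term: penalty for not being accepted as art at all.
    # Second term: penalty for sitting too comfortably inside a known style.
    return -math.log(max(p_art, EPS)) + style_ambiguity(style_probs)


if __name__ == "__main__":
    confused = [0.25, 0.25, 0.25, 0.25]    # critic cannot place the style
    derivative = [0.97, 0.01, 0.01, 0.01]  # critic is sure of the style
    print(generator_loss(0.9, confused), generator_loss(0.9, derivative))
```

With the same `p_art`, the stylistically ambiguous image receives the lower loss, so the generator is nudged towards work that still reads as art but resists classification.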

As Kalpokiene and Kalpokas point out, it’s a narrow way of thinking to assert that the process of artificial creativity somehow disqualifies its results. Surely it is the product – the end result – that justifies the means of production. Do we quibble over Vermeer’s (probable) use of a camera obscura and other optical devices to achieve his results? Or Jackson Pollock’s use of poured paint instead of traditional brushwork? Is whatever value the work of Koons or Hirst could be argued to possess invalidated or diminished by their extensive use of fabricators?

Thirdly, the product perspective attempts to bypass these issues by focusing on the formal characteristics of the work to validate its creativity; to evaluate a work of art in terms of its novelty, value, aesthetic qualities, and other supposedly internal characteristics. This is immediately problematic. The history of human creativity is full of ground-breaking works of art, initially dismissed. While posterity now looks kindly on Van Gogh, his contemporaries did so with pity. One consequence of the Cognitive Revolution was a general loss of certainty around objective criteria to determine value. Liberal Humanism, of the kind the Cognitivists did so much to popularise, asserts the validity, if not primacy, of the individual experience. Subjective responses offered more freedom to explore the workings of the mind, unrestrained by rules and metrics. But the consequence is, we have lost a set of measures that might have been useful now we’re trying to distinguish between Organic and Synthetic entities.

Perhaps we need an equivalent of the Turing Test. In the famous experiment (originally, the Imitation Game) proposed by Alan Turing in 1950, if the machine can persuade a human they are speaking with another human, the machine passes the test. In our era of Generative AI, Marcus du Sautoy suggests a further test – one that assesses a capacity for creativity. He calls it the ‘Lovelace Test’ after Ada Lovelace, the 19th-century English mathematician, daughter of the ‘mad, bad and dangerous to know’ Lord Byron, and pioneer of computer programming. Lovelace built on Charles Babbage’s work on his calculating machine by beginning to consider what else the machine might be capable of. To pass the Lovelace Test, an algorithm must originate a creative work of art, such that the process is repeatable (i.e. it isn’t the result of a hardware error) and yet the programmer is unable to explain how the algorithm produced its output. As du Sautoy points out, Lovelace ‘perfectly encapsulates, you need a bit of Byron as much as Babbage.’

When ChatGPT and its equivalents make mistakes, the mistakes are referred to as ‘Hallucinations’. For example, when I asked it to list five examples of abstract art that emerged in the 1920s, number three on the list was ‘No. 61 (Rust and Blue)’ by Mark Rothko (1928). It took me a second to spot the error, because the slickness, grammatical precision, and speed of response can make blatantly wrong answers seem plausible. But what is a flaw in responses that require factuality is a gift in image-making and imagination. In Generative AI, Hallucinations are repeatable variations, but their explanations are a mystery. And mystery, arguably, lies at the heart of Abstraction. The source of its emotional power to reflect our feelings back at us, in a mirror, but darkly.

Can it be Art?

Earlier in this essay I noted the concurrent development of the science of the mind, abstraction and computing during the 1950s and 1960s. Today, this relationship is more relevant than ever. ‘Artificial Intelligence’, ‘Art’, ‘Artefact’, ‘Artifice’, all derive from the common Latin root ‘ars’ meaning skill or technique. They share a connection to human creativity and ingenuity. Art can be used to explore and express the human experience, while Artifice and Artificial are often employed to manipulate and control that experience in various ways. Generative Artificial Intelligence appears to occupy a liminal space between Art and Artifice – between expression of sentient behaviours and a subtler subterfuge of imitation. But having created, reared and educated this technology in our own image, is it any wonder how difficult it is to distinguish between the two?

Learning from the existing cultural canon is how artists are taught. That goes for AI artists as much as human ones. By studying hard over the past few years (but accelerating even faster in the past 12 months), machine learning systems are also beginning to recognise the rules of human aesthetics and predict which aspects humans will find pleasing. The advantage that AI artists have is the speed with which they can learn, along with the sheer number of precedents upon which they can draw.

It’s difficult, therefore, to draw any hard and fast conclusions. Are these tools ‘creative’? Are the creative agents involved valid? Does the creative process legitimise them? Do they have sufficient aesthetic value to earn their stripes as Creative Intelligence? Or is it only through collaboration with a human agent that they can claim that status? In some ways, attempting to draw a clear line between the author and the pen is a redundant exercise. Computer Generated Imagery (CGI) no longer raises eyebrows. We happily buy into the superheroes and spaceships on film. We know they’re not real. But we accept their appearance as necessary elements in a narrative that (we assume) is broadly human-made. Suspension of disbelief is central to any visual simulation. As creators and storytellers, we use tools to craft artefacts – I’m using one now as I type. The difference lies in the degree of automation the machine uses to generate the end result, and how much input the human ‘helmsman’ has in the process. Who is the creator and who the tool? Who is creating movement – the steam engine or the stoker shovelling coal? And frankly, with the current acceleration of AI sophistication, any conclusions we arrive at today may be redundant tomorrow. So, in the meantime, here are some more ‘paintings’ created in collaboration with AI agents. You decide.